Natural Algorithms¶

The course is derived from the course Biological Circuit Design by Michael Elowitz and Justin Bois, 2020 at Caltech.

The original course material has been changed by Matthias Fuegger and Thomas Nowak.

About this course¶

Goal of this course is to provide you with means to understand, analyze, and design basic genetic circuits in cells. In chapter 1 we start with basic principles of genetic control and discuss:

- cell principles

- circuit principles

- mathematical models to describe genetic circuits

- steady state and dynamic behavior

- separation of time scales

- regulation principles

- activation & repression

- their implications for the design of new circuits

Coding setup¶

We will stick to Python 3 code that can be run stand-alone at your computer whenever possible. First let's load some libraries we will need for the course.

# source: http://be150.caltech.edu/2020/content/lessons/01_intro_to_circuit_design.html

# imports

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

1. Introduction¶

Cells¶

A cell is a membrane-bound collection of interacting molecules. Interaction can change the composition of molecules and their properties. For example, two interacting molecules can form a dimer upon interaction.

At an abstract level, these processes can be interpreted as a cell

- sensing its environment

- processing the stimuli

- adapting its behavior, e.g., changing its growth rate, secreting molecules, or moving away from a repellent

Models¶

To describe (aspects of) a cell's behavior models on different levels of abstraction have been used. During the course we will discuss some of sees. An example is Chemical Reaction Networks (CRNs) that describe the evolution of species in a cell via reactions that occur among the species. Reactions modify, generate, and consume other species. While such a (chemically inspired) model may seem mostly relevant at a molecular level, it can very well be used abstract levels. More on this later in the course.

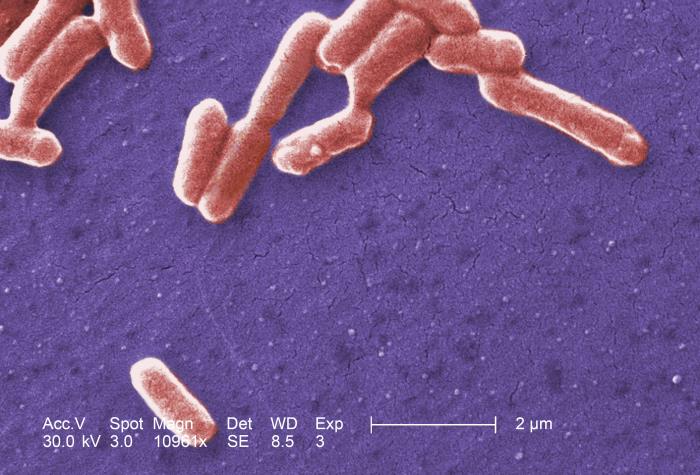

A model organism: the bacteria Escherichia coli (E. coli)¶

While many of the concepts covered in the course are general to a wide class of cells, we will often refer to E. coli as an example. E. coli is a gram negative bacterium. It has an inner and outer membrane.

Their shape is approximately cylindrical with round caps (rod shaped) with a radius of $0.5\mu$ and length of $2\mu m$. Their volume of about $1 \mu m^3$. E. coli cultures grow by cell division with a rate depending on several factors like strain and available nutrients in the growth medium. During fast growth cells duplicate about every 20 min.

Below is a schematic of its membranes from the inside of the cell (cytoplasm, bottom in figure) to the outside (top in the figure).

Recently microscopy has made significant advances in capturing high-resolution images of parts of cells. One such image you can find in Matias, Valério RF, et al., J. of Bacteriology, 2020 Fig.4. of the paper where membranes are nicely visible.

The genome of E. coli is a double stranded circular DNA and varies considerably by strain. For MG1655 (a K-12 substrain) it is 4,641,652 bp long and can be found in the ncbh database.

Crowded volume¶

The cytoplasm of a cell should be imagined as a crowded space in contrast to molecules freely floating around. To get an impression how densely packed it is, see this simulation of the cytoplasm of E. coli during 15ms by McGuffee and Elcock.

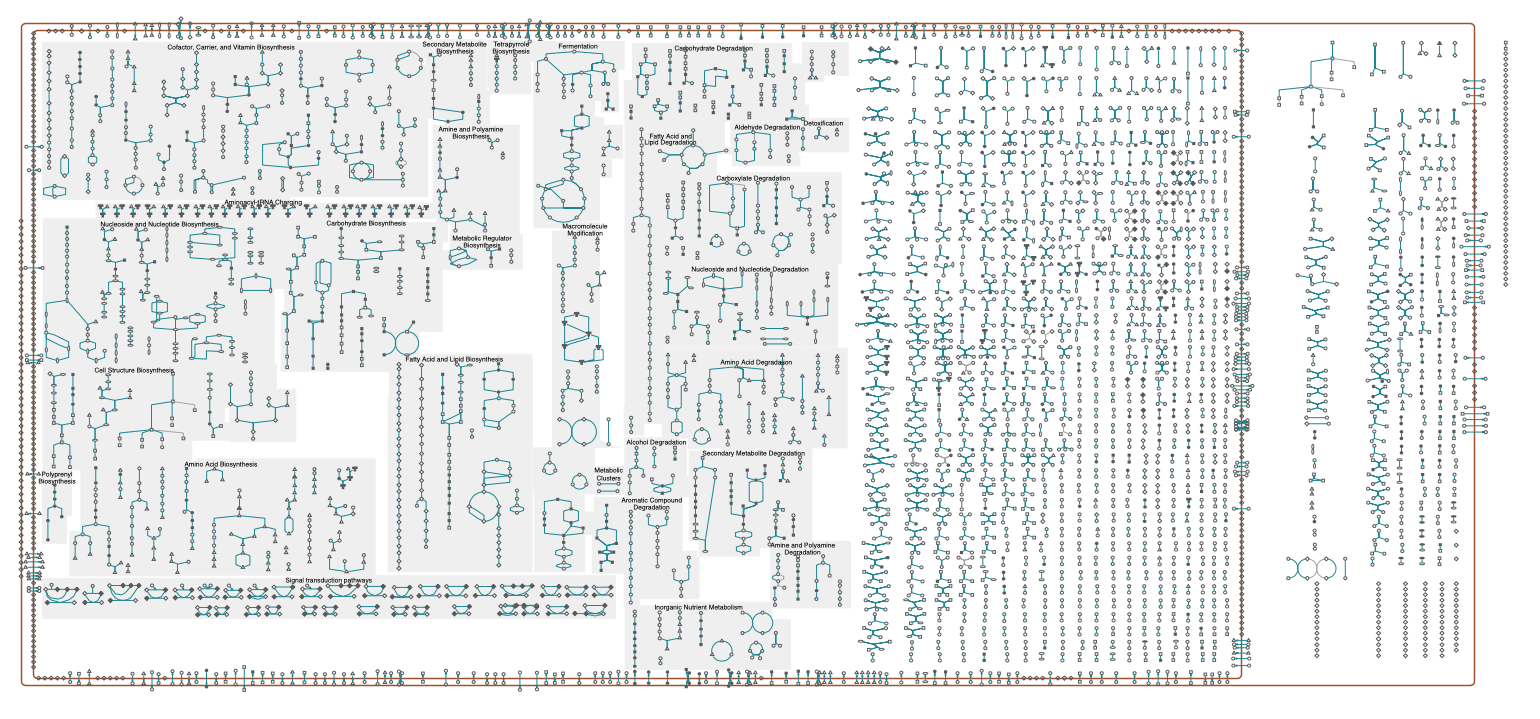

Pathways in E. coli¶

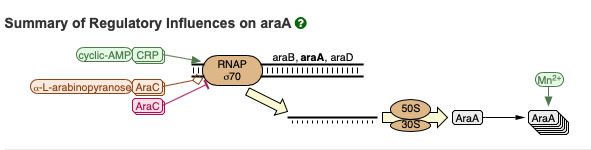

Reactions are typically grouped together into pathways, that describe reactions for a certain cell function. Examples are the modification of molecules by enzymes along metabolic pathways as well as transcription and translation in genetic pathways. An overview of reactions grouped into pathways is shown in the figure below. An interactive schematic is available here by ecocyc and lists of pathways available, e.g., by biocyc and kegg.

Below is an excerpt of reactions from a genetic pathway that includes the transcription of the araA gene. See here for an interactive version. These reations will play a role later on in the course.

Circuits¶

We may view a pathway as a system that comprises of:

- a set of input species

- a set of output species

- a set of internal species

- a set of reactions that describe the interactions among all these species

This is very similar to what is called a circuit which comprises of:

- a set of input ports/nodes

- a set of output ports/nodes

- a set of internal nodes

- a set of electronic components that relate signals at the nodes to each other.

We will thus refer to a pathway as a biological circuit. There are natural circuits that have naturally evolved and synthetic biological circuits that have been engineered into the cell. Synthetic circuits, however, use components of naturally evolved circuits that are then either used directly or adapted for synthetic circuits.

Signals¶

An execution or signal trace of an electronic circuit is given by the voltage $u(t)$ at each of its nodes with respect to a ground potential over time $t$. An execution not only depends on the circuit, but also on the circuit environment, interacting with the circuit via its input and output ports - in the end we want the circuit to react differently to different inputs.

A digital signal with values in $\{0,1\}$ is sometimes more convenient when designing, analyzing, and simulating a circuit and it is obtained from the analog signal by discretizing at a threshold voltage.

Signals in a biological circuit are typically given as concentrations or counts (in a fixed volume) of species over time. We will see that digital abstractions will also be used in biological circuits.

However, we stress that signals are not necessarily voltages and concentrations. For example, in electrical circuits, it is sometimes more convenient to use the current $i(t)$ through a certain connection between nodes as the signal. We may even mix signal domains and use current for the input signal and voltage for the output signal or vice versa. Likewise, current-analogs are used in biological circuits as signals. Examples are production rates of species (Aoki et al., Nature 2019) and the number of RNA polymerases (RNAPs) that pass a certain DNA bp per time (RNAP flux) (Kelly et al., J. of Biological Engineering 2009), (Borujeni et al., Nature comm. 2020).

Circuit components¶

Examples for analog electronic components are the resistor and the capacitor, example for digital components (gates) are the inverter (INV), a NAND gate, and a NOR gate:

Let us start with two analog components. The resistor with resistance $R$ between two of the circuit's nodes $p$ and $q$ relates voltage $u_{pq}(t)$ and current $i_{pq}(t)$ accross it by $$ u_{pq}(t) = R \cdot i_{pq}(t) \enspace. $$

The capacitor with capacitance $C$ relates voltage $u_{pq}(t)$ and current $i_{pq}(t)$ across it by $$ i_{pq}(t) = C \cdot \frac{du_{pq}(t)}{dt} \enspace. $$

These analog components do not have dedicated input and output ports. They are undirected and simply restrict signals among the ports they connect in certain ways.

Thus, a priori, such a circuit thus does not have a direction. The choice of which ports are inputs and which ones outputs is irrelevant for the executions it produces. It is rather a convention to tell the circuit's user which node voltages we propose to vary (via the environment) to obtain a certain intended behavior at the output nodes (given that the output's environment does not interfere with this).

Additionally to the circuit components, we also need to include the Kirchhoff current law. Let $p$ be a node in the circuit and $N(p)$ the set of neighboring nodes. Then, $$ \sum_{q \in N(p)} i_{qp}(t) = 0\enspace. $$ Further, the Kirchhoff voltage law states that along a closed path $C$ of edges in the circuit, $$ \sum_{e \in C} u_{e}(t) = 0\enspace. $$

Directed vs undirected¶

This is in contrast to a directed software-design components. When implementing a function that computes $f(x) = x/2$, inputs and outputs are not interchangeable. We write

def f(x: float) -> float:

return x/2

f(1.0)

Decoupling¶

Another central property of the above code is decoupling. We may, e.g., write

x = 1.0

[f(x), (f(x), f(x)), 1/1 * f(x), f(x)/1]

and would be surprised to obtain different results for these function calls. This is in general not the case for circuits: using the same "input" $x$ for several circuit instances may change the input node's voltage.

Digital circuit design intends to provide directed and decoupled circuit components (to a certain extent) to the designer. We will discuss these in a follow-up lecture.

Example¶

Let's look at a small example, an RC circuit, shown in the figure below.

We have marked the ground potential with $0$V, a dedicated input node and an output node. Input and output signals are in terms of voltage.

The first assumption made is one about the circuit's environment: we have assumed that the output current is $0$A. While this may be true for some circuits, or at least approximately hold since output currents are very low, it may be violated for others. For example, we cannot simply compose two such circuits sequentially: the assumption would most a priori be violated with a positive input current of the second instance.

For the input environment, we assume that the input voltage was $0$V all before time $0$, and has jumped to $1$V at time $0$ where it remains.

We have: \begin{align} u_R(t) &= R \cdot i_R(t) \qquad&&\text{(resistor rule)}\\ i_C(t) &= C \cdot \frac{du_{out}(t)}{dt} \qquad&&\text{(capacitor rule)}\\ u_{in}(t) &= u_R(t) + u_{out}(t) &&\text{(Kirchhoff voltage)}\\ i_{R}(t) &= i_C(t) &&\text{(Kirchhoff current)}\\ \end{align}

and thus

\begin{align} \frac{du_{out}(t)}{dt} &= \frac{1}{RC} \left( u_{in}(t) - u_{out}(t) \right)&& \\ u_{out}(0) &= 0 &&\text{(input environment)} \end{align}The change of the output $\frac{du_{out}(t)}{dt}$ is thus proportional to the difference of input and output $u_{in}(t) - u_{out}(t)$. If $u_{in}(t) > u_{out}(t)$, the output is corrected to increase and vice versa for $u_{in}(t) < u_{out}(t)$.

For our assumptions of an input at $1$V, we observe that the following equation fulfills the above differential equation and initial conditions \begin{align} u_{out}(t) = 1 - e^{-\frac{t}{RC}} \enspace. \end{align} Let's plot it over time:

# compute

t = np.linspace(0, 10, 200)

R = 1

C = 1

uout = 1 - np.exp(-t/(R*C))

# build plot

plt.figure(figsize=(5,3))

plt.title('output $u_{out}$ over time')

plt.xlabel("time $t$ (s)")

plt.ylabel("$u_{out}$ (V)")

plt.plot(t, uout, linewidth=2, color="tomato", label="$u_{out}$")

plt.ylim(0,1.1)

plt.legend()

plt.show()

First steps: Develop intuition for the simplest gene regulation circuits¶

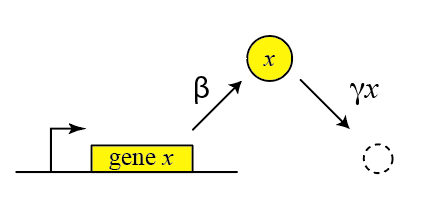

Let's turn to biological circuits. We will start by thinking about a single gene, coding for a single corresponding protein. This minimal example will allow us to develop intuition for the dynamics of the simplest gene regulations systems and lay out a procedure that we can further extend to analyze more complex circuits.

What protein concentration will be produced by a gene x? We assume that the gene will be transcribed to mRNA and those mRNA molecules will in turn be translated to produce proteins, such that new proteins are produced at a total rate $\beta$ molecules per unit time. The $x$ protein does not simply accumulate over time. It is also removed both through active degradation as well as dilution as cells grow and divide. For simplicity, we will assume that both processes tend to reduce protein concentrations through a simple first-order process, with a rate constant $\gamma$.

The approach we are taking can be described as "phenomenological modeling." We do not explicitly represent every underlying molecular step. Instead, we assume those steps give rise to "coarse grained" relationships that we can model in a manner that is independent of many underlying molecular details. The test of this approach is whether it allows us to understand and experimentally predict the behavior of real biological systems. See Wikipedia's article on phenomenological models and this article by Jeremy Gunawardena.

Thus, we can draw a diagram of our simple gene, x, with its protein being produced and removed (dashed circle):

Here, protein production occurs at rate $\beta$ and degradation+dilution at rate $\gamma x$. We can then write down a simple ordinary differential equation describing these dynamics:

\begin{align} &\frac{dx}{dt} = \mathrm{production - (degradation+dilution)} \\[1em] &\frac{dx}{dt} = \beta - \gamma x \end{align}where

\begin{align} \gamma = \gamma_\mathrm{dilution} + \gamma_\mathrm{degradation} \end{align}A note on effective degradation rates: When cells are growing, protein is removed through both degradation and dilution. For stable proteins, dilution dominates. For very unstable proteins, whose half-life is much smaller than the cell cycle period, dilution may be negligible. In bacteria, mRNA half-lives (1-10 min, typically) are much shorter than protein half-lives. In eukaryotic cells this is not necessarily true (mRNA half-lives can be many hours in mammalian cells).

Relation to electronic circuits¶

Let's for a moment stop and again look at the equation of the RC circuit for the case $u_{in}(t) = c$ for $t \geq 0$. Both equations look deceptively similar:

\begin{align} \frac{du_{out}(t)}{dt} &= \frac{c}{RC} - \frac{1}{RC} \cdot u_{out}(t) && \text{ (RC circuit)}\\ \frac{dx}{dt} &= \beta - \gamma x && \text{ (gene regulation)} \end{align}In fact the electronic circuit behaves the same as the genetic circuit if $\beta = \frac{c}{RC}$ and $\gamma = \frac{1}{RC}$; a strange coincidence?

While a relation between the circuits is not immediate, let's consider a different, slightly adapted, electronic circuit shown below.

Now the input signal is the input current $i_{in}(t)$ and the output signal the output voltage $u_{out}(t)$; at least for the moment.

We have, \begin{align} i_C(t) &= C \cdot \frac{du_{out}(t)}{dt} &&\text{ (capacitor rule)}\\ i_R(t) &= \frac{1}{R} \cdot u_{out}(t) &&\text{ (resistor rule)}\\ i_{in}(t) &= i_C(t) + i_R(t) && \text{ (Kirchhoff current)} \end{align}

and thus \begin{align} C \cdot \frac{du_{out}(t)}{dt} &= \cdot i_{in}(t) - \frac{1}{R} \cdot u_{out}(t) \end{align}

Let us change the output even further to the charge $Q(t)$ that is stored in the capacitor at time~$t$. For a capacitor with voltage $u(t)$ across it, it holds that $$ Q(t) = C \cdot u(t) \enspace. $$

Calling the output signal $Q_{out}$, we thus have, \begin{align} \frac{dQ_{out}(t)}{dt} &= i_{in}(t) - \frac{1}{RC} \cdot Q_{out}(t) \enspace. \end{align}

Let's compare: the input current $i_{in}$ now corresponds to the production rate $\beta$, the output charge $Q_{out}$ to the protein counts (per volume), and $\frac{1}{RC}$ to the degradation and dilution rate $\gamma$.

Can you intuitively explain the relation between both the electronic and the genetic circuit?

Solving for the steady state¶

Often, one of the first things we would like to know is the concentration of protein under steady state conditions. To obtain this, we set the time derivative to 0, and solve:

\begin{align} &\frac{dx}{dt} = \beta - \gamma x = 0 \\[1em] \Rightarrow \,&x_{\mathrm{st}} = \beta / \gamma \end{align}In other words, the steady-state protein concentration depends on the ratio of production rate to degradation rate.

Including transcription and translation as separate steps¶

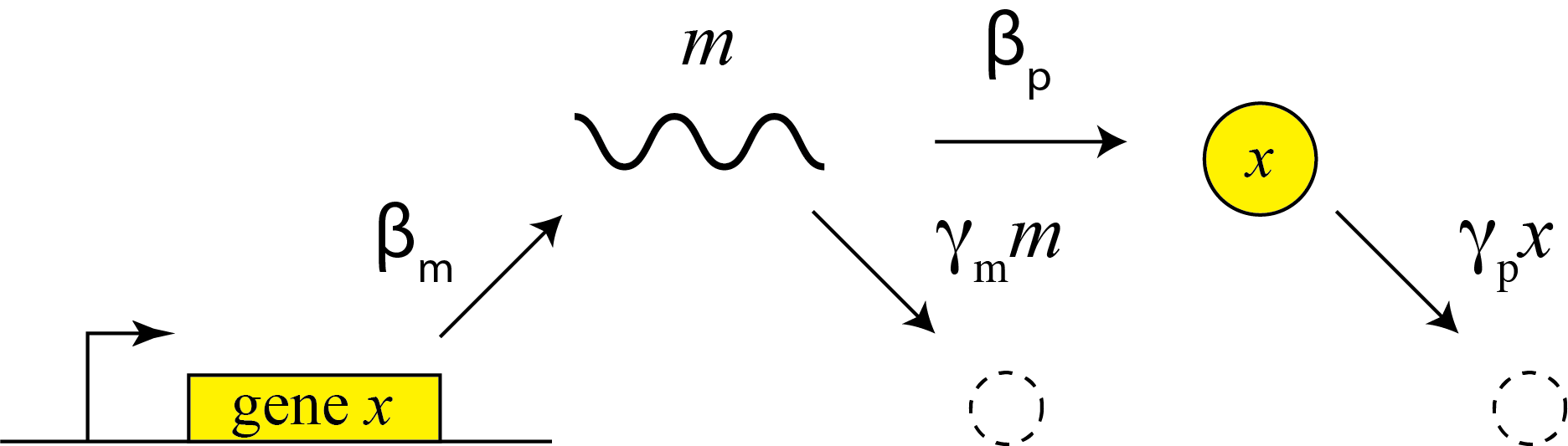

This description does not distinguish between transcription and translation. However, considering both processes separately can be important in more dynamic and stochastic contexts that we will encounter later in the course. To do so, we can simply add an additional variable to represent the mRNA concentration, which is now transcribed, translated to protein, and degraded (and diluted), as shown schematically here:

These reactions can be described by two coupled differential equations for the mRNA (m) and protein (x):

\begin{align} &\frac{dm}{dt} = \beta_m - \gamma_m m, \\[1em] &\frac{dx}{dt} = \beta_p m - \gamma_p x. \end{align}Now, we can determine the steady state mRNA and protein concentrations straightforwardly, by setting both time derivatives to 0 and solving. We find:

\begin{align} &m_\mathrm{st} = \beta_m / \gamma_m, \\[1em] &x_\mathrm{st} = \frac{\beta_p m_\mathrm{st}}{\gamma_p} = \frac{\beta_p \beta_m}{\gamma_p \gamma_m}. \end{align}From this, we see that the steady state protein concentration is proportional to the product of the two synthesis rates and inversely proportional to the product of the two degradation rates.

And this gives us our first design puzzle: the cell could control protein expression level in at least four different ways: It could modulate (1) transcription, (2) translation, (3) mRNA degradation or (4) protein degradation rates (or combinations thereof). Are there tradeoffs between these different options? Are they all used indiscriminately or is one favored in natural contexts?

From gene expression to gene regulation - adding a repressor¶

Life would be simple—perhaps too simple—if genes were simply left "on" all the time. To make things interesting the cell has to regulate them, turning their expression levels lower or higher depending on environmental conditions and other inputs. One of the simplest ways to do this is through repressors. Repressors are proteins that can bind to specific binding sites at or near a promoter to change its activity. Often the strength of their binding is contingent on external inputs. For example, the LacI repressor normally turns off the genes for lactose utilization in E. coli. However, in the presence of lactose in the media, a modified form of lactose binds to LacI, inhibiting its ability to repress its target genes. Thus, a nutrient (lactose) can regulate expression of genes that allow the cell to use it. (For the scientific and historical saga of this seemingly simple system, we recommend the fascinating, wonderful book "The lac operon" by B. Müller-Hill.)

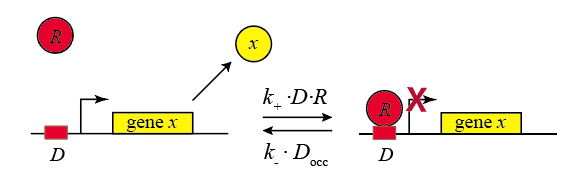

In the following diagram, we label the repressor R.

Within the cell, the repressor binds and unbinds its target site. We assume that the expression level of the gene is lower when the repressor is bound and higher when it is unbound. The mean expression level of the gene is then proportional to the fraction of time that the repressor is unbound.

We therefore compute the "concentration" of DNA sites in occupied or unoccupied states. (Within a single cell an individual site on the DNA is either bound or unbound, but averaged over a population of cells, we can talk about the mean occupancy of the site). Let $D$ be the concentration of unoccupied promoter, $D_\mathrm{occ}$ be the concentration of occupied promoter, and $D_\mathrm{tot}$ be the total concentration of promoter, with $D_\mathrm{tot} = D + D_\mathrm{occ}$, as required by conservation of mass.

We can also assume a separation of timescales between the rates of binding and unbinding of the repressor to the DNA binding site are both often fast compared to the timescales over which mRNA and protein concentrations vary. (Careful, however, in some contexts, such as mammalian cells, this is not true.)

All we need to know is the mean concentration of unoccupied binding sites, $D/D_\mathrm{tot}$.

\begin{align} k_+ D R &= k_- D_\mathrm{occ} \\[1em] D_\mathrm{occ} &= D_\mathrm{tot} - D \\[1em] \frac{D}{D_\mathrm{tot}} &= \frac{1}{1+R/K_\mathrm{d}}, \end{align}where $K_\mathrm{d} = k_- / k_+$. From this, we can write the production rate as a function of repressor concentration,

\begin{align} \beta(R) = \beta_0 \frac{D}{D_\mathrm{tot}} = \frac{\beta_0}{1+R/K_\mathrm{d}}. \end{align}Properties of the simple binding curve¶

This is our first encounter with a soon to be familiar function. Note that this function has two parameters: $K_\mathrm{d}$ specifies the concentration of repressor at which the response is reduced to half its maximum value. The coefficient $\beta_0$ is simply the maximum expression level, and is a parameter that multiples the rest of the function.

# Build theoretical curves

R = np.linspace(0, 10, 200)

b0 = 1

Kd = 1

beta = b0 / (1 + R / Kd)

init_slope = -R + 1

# Build plot

plt.figure(figsize=(5,3))

plt.title('Kd = 1, β₀ = 1')

plt.xlabel("R")

plt.ylabel(r"$\beta(R)$")

plt.plot(R, beta, linewidth=2, color="tomato", label="β(R)")

plt.plot(R, init_slope, linewidth=2, color="orange", label="initial slope")

plt.ylim(0,1)

plt.legend()

plt.show()

Gene expression can be leaky¶

As an aside, we note that in real life, many genes never get repressed all the way to zero expression, even when you add a lot of repressor. Instead, there is a baseline, or "basal", expression level that still occurs. A simple way to model this is by adding an additional constant term, $\alpha_0$ to the expression

\begin{align} \beta(R) = \alpha_0 + \beta_0 \frac{D}{D_\mathrm{tot}} = \alpha_0 + \frac{\beta_0}{1+R/K_\mathrm{d}}. \end{align}Given the ubiquitousness of leakiness, it is important to check that circuit behaviors do not depend on the absence of leaky expression.

# Build the theoretical curves

R = np.linspace(0, 20, 200)

b0 = 1

Kd = 1

a0 = 0.25

beta = a0 + b0 / (1 + R / Kd)

# Build plot

plt.figure(figsize=(5,3))

plt.title("Kd = 1, β₀ = 1, a₀ = 0.25")

plt.xlabel("R")

plt.ylabel(r"$\beta(R)$")

plt.plot(R, beta,

linewidth=2,

color="tomato",

label="β(R)")

plt.plot([R[0], R[-1]], [a0, a0],

linewidth=2,

color="orange",

label="basal expression")

plt.ylim(0, beta.max())

plt.legend()

plt.show()

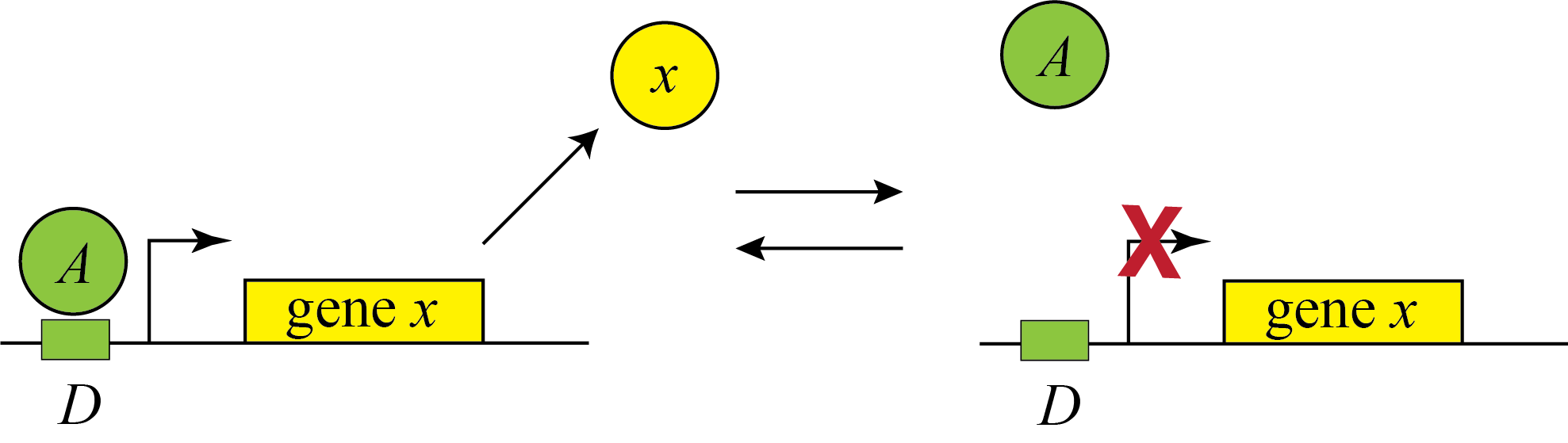

Activation¶

Genes can be regulated by activators as well as repressors. Treating the case of activation just involves switching the state that is actively expressing from the unbound one to the one bound by the protein (now called an Activator). And, just as the binding of a repressor to DNA can be modulated by small molecule inputs, so too can the binding of the activator be modulated by binding to small molecules. In bacteria, one of many examples is the arabinose regulation system.

This produces the opposite, mirror image response compared to repression, shown below with no leakage.

# 4

A = np.linspace(0, 20, 200)

beta_A = A / (1 + A)

beta_R = 1 / (1 + R)

# Build plot

plt.figure(figsize=(5,3))

plt.title(r"$\beta$ function")

plt.xlabel("A/Kd, R/Kd")

plt.ylabel("β/β₀")

plt.plot(A, beta_A,

linewidth=2,

color="blue",

label="β(A)")

plt.plot(R, beta_R,

linewidth=2,

color="tomato",

label="β(R)")

plt.ylim(0, 1)

plt.legend()

plt.show()

Activator vs. Repressor–which to choose?¶

And now at last we have reached our first true 'design' question: The cell has at least two different ways to regulate a gene: using an activator or using a repressor. Which should it choose? Which would you choose if you were designing a synthetic circuit? Why? Are they completely equivalent ways to regulate a target gene? Is one better in some or all conditions? How could we know?

These questions were posed in a study by Michael Savageau (PNAS, 1974), who tried to explain the naturally observed usage of activation and repression in bacteria. A different explanation was later developed by Shinar et al (PNAS 2004). We end the lecture with this question - try to think about when and why you would use each type of regulation!

Further reading¶

As starting material we highly recommand open courses like:

- The course Biological Circuit Design at Caltech by Elowitz and Bois. Our course is derived from their this course.

- The course Introduction to Biological Engineering Design at MIT by Kuldell and Endy